State laws are putting AI in the driver's seat

Scharfsinn // Shutterstock

A person relaxing in their self-driving car.

Imagine a world where no one loses their life because of a driver distracted by their phone or inebriated by one too many drinks. One where your hands are free from the steering wheel as you commute to work so you can reclaim time to do other tasks. A world where traffic doesn’t get congested because every vehicle rides optimally and in sync with the others beside it—improving efficiency and, with it, sustainability.

It sounds like a scene out of “The Jetsons,” but it’s the promise dangled in front of today’s drivers by companies developing autonomous vehicle technology. By leveraging artificial intelligence, these tech firms aim to increase safety and reduce the stress of transporting ourselves from place to place—arguably something Americans haven’t enjoyed since the golden age of trains.

But those aspirations in recent years have run up against concerns from lawmakers and regulators. In a patchwork approach, states have passed a slew of legislation intended to pave the way for experimentation and keep roadways safe.

TruckInfo.net analyzed legislation nationwide compiled by the National Conference of State Legislatures to illustrate trends in autonomous vehicle laws since states began passing regulations in 2017.

TruckInfo.net

Some states allow driverless vehicles on roads—with restrictions

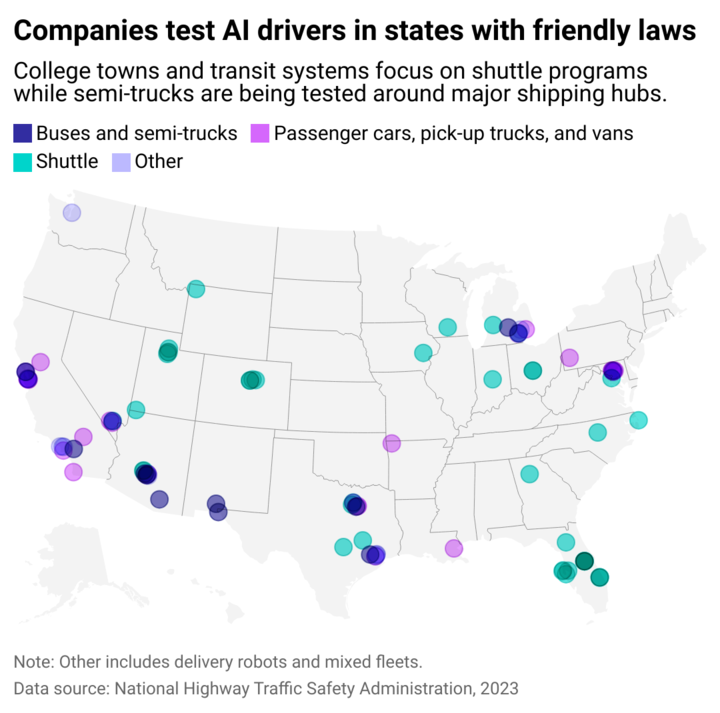

A map of the US showing which cities have self-driving buses, semi-trucks, and cars on the road.

Since 2017, 27 states have put laws on the books allowing driverless vehicles to operate—though there is variation between states in whether they require a human driver to be behind the wheel and ready to take over. Only a few states allow a vehicle to be completely independent of a human operator on the road. And six states have only legalized driverless commercial trucks—not passenger vehicles. Companies eager to test the capabilities of AI have put vehicles on the road, ranging from passenger cars and vans to shuttles and semi-trucks.

Mississippi is the most recent state to legalize autonomous vehicles on state roads; the governor signed the legislation in March 2023.

Other statehouses farther along in developing regulations, like California, are thinking ahead to issues that include labor disruptions and safety risks posed by self-driving commercial vehicles.

Democrats in the Golden State are pursuing legislation to protect the labor market from any job-loss shocks that could result from letting AI drive public transportation. The proposal’s provisions include lead time for existing workers to be retrained for new positions created by AI before agencies adopt the technology that would put them out of their original jobs.

A Democratic lawmaker in Illinois wants to create rules to force sellers to disclose to drivers the limitations and risks of vehicles capable of partial self-driving.

But not every state legislator has successfully mitigated the risks of the still-developing technology. That’s even as Tesla allows vehicle owners to push the limits of driverless tech on their own, charging $200 to $300 per month to access its nascent driving AI system.

Igor Corovic // Shutterstock

Autonomy is a spectrum, and the legality of emerging driverless vehicles varies

An adaptive cruise control lever.

SAE International developed the most widely cited system for defining the levels of autonomy vehicles can exhibit. The scale runs from SAE Level 0 to SAE Level 5, with the latter describing a vehicle that can drive itself in all conditions, including rain, snow, or sleet, without a human driver.

Levels 0-2 require a human driver and constant supervision. A vehicle at SAE Level 0 has features like automatic braking in emergencies and notifications of vehicles in the driver’s blind spot. At Level 1, features include either adaptive cruise control that can throttle speed up and down or automatic centering of the vehicle within a lane without the driver needing to touch the steering wheel. At Level 2, both vehicle features operate simultaneously, but under human supervision. At Level 3, the human can step back from driving unless the system requests a takeover, and vehicles can navigate traffic jams on their own.

In seven states (Nevada, Florida, Georgia, West Virginia, Utah, North Carolina, and North Dakota), no human driver is legally required to be behind the wheel if the vehicle’s AI is capable of SAE Level 4 or 5, at which no human interaction is necessary. In Georgia’s case, the law considers the system “fully autonomous” if it does “not at any time request that a driver assume any portion of the dynamic driving task.”

Most companies developing self-driving AI have yet to declare their systems capable of SAE Level 3 or higher, including Tesla. Self-driving commercial truck firms signaled their intent to put fully autonomous trucks on public roads last year, but with safety drivers still behind the wheel.

In some cases in these states, experimental features have resulted in injury or death for pedestrians, which has drawn even more attention from media and regulators and threatens to set testing back. In the past year, the National Highway Traffic Safety Administration reported 158 crashes involving automated driving systems. One of the crashes involved “serious” injuries, but most were not associated with any injury.

Flystock // Shutterstock

For now, regulators and companies want human drivers attentive

A person paying attention in a self-driving Tesla.

Perhaps the most accessible self-driving AI for consumers at the moment is Tesla’s Full Self-Driving mode. Tesla is by far the most popular electric vehicle brand in America, and its AI can learn customers’ driving habits in every state where the vehicles are sold.

Tesla’s AI is considered SAE Level 2, meaning it still requires human attention and control most of the time. The company describes its Full Self-Driving mode features as “active guidance and assisted driving under your active supervision.” But not all drivers have had the patience for the safety measures required by the current crop of self-driving AI systems.

New aftermarket technology has emerged to help drivers of early autonomous-capable vehicles circumvent safety measures—creating a legal gray area in the meantime.

However, in the case of so-called “wheel weights” for Tesla vehicles, the words “technology” and “autonomous-capable” should be taken with a grain of salt. The heavy plastic steering wheel attachments simulate the weight of a driver’s hands on the steering wheel. This fools the car’s sensors while it is in an autonomous mode that, for safety, requires the driver to have their hands on the wheel and be paying attention. Critics have described the devices as “incredibly reckless.”

And legislators agree laws need to catch up, at least in some states. So-called “defeat devices” on cars have been made illegal at the federal level in the past through agencies like the Environmental Protection Agency. That’s because until now, defeat devices were mostly used to illegally circumvent emissions regulations and seat belt laws.

In 2022, Tesla rolled out a feature to detect certain types of these devices on customers’ steering wheels. In Arizona, where lots of self-driving tech is being tested, Republican lawmakers have tried since 2020 to pass a law prohibiting the devices. Last year, it died in committee. For now, Arizona is among 10 states—also including Georgia, Colorado, Florida, Oklahoma, Iowa, Nebraska, Nevada, North Dakota, and New Hampshire—where autonomous vehicles can legally operate as long as a backup driver is available in certain cases.

Freedomz // Shutterstock

Insurance requirements divide some states

A person filling out an insurance claim after an accident.

Like any hunk of steel hurtling down a highway, automated vehicles require varying levels of insurance depending on the state they’re registered in for personal use or professional testing.

Some states require millions in coverage before operating an autonomous commercial vehicle. No laws relate to driverless passenger vehicles in Alabama, but commercial ones must carry at least $2 million in coverage. California requires autonomous vehicles to carry $5 million in coverage. Other states, like Arkansas, simply require the operator to carry minimum liability insurance. Colorado—where no human driver is required by law for fully autonomous vehicles—has no insurance requirements for autonomous vehicles.

5m3photos // Shutterstock

Don’t follow those trucks too closely; they might be ‘platooning’

Two 18-wheelers on a highway platooning.

Laws protecting a method of driving long distances called “platooning” have been enacted in some states at the behest of major driverless trucking companies. Platooning is most commonly seen among commercial trucks hauling freight, but it could extend to any vehicles following each other in tight formation to reduce wind resistance and improve fuel efficiency.

Legislators in several states have tried to regulate or otherwise protect the practice this year. In Arkansas, where major big-box wholesaler and logistics powerhouse Walmart is based, the legislature first passed a law in 2021 protecting platoons for moving freight and people. And in February 2023, lawmakers updated that law and created an exception to its restrictions on unsafe tailgating of other vehicles to allow for “coordinated” truck platooning.

In Missouri, two separate bills aimed at legalizing platooning, introduced by Republicans in the House and Senate, did not pass before the legislative session adjourned.

Pormezz // Shutterstock

Some are calling for uniform federal laws

A close-up of a person’s hand as they sign a document.

Policy experts worry a piecemeal approach to legislation could become a headache for companies operating commercial trucking fleets and everyday drivers.

Just as a business operating a fleet of platooning delivery trucks across state lines will want to know how to comply with all laws, so, too, will the growing number of everyday Americans purchasing vehicles equipped with the technology.

“Collaboration is critical. Automobile manufacturers, technology developers and relevant government agencies can create far more value working together than against each other,” World Economic Forum researchers wrote in a 2021 paper.

Since 2016, the NHTSA has regularly released federal guidelines, and in 2021 it began requiring reports of autonomous-vehicle crashes. A bipartisan group in Congress has also signaled interest in discussions about federal regulations this summer.

Story editing by Jeff Inglis. Copy editing by Paris Close. Photo selection by Abigail Renaud.

This story originally appeared on TruckInfo.net and was produced and distributed in partnership with Stacker Studio.